Targeted Phishing Against a High-Value Open Source Maintainer

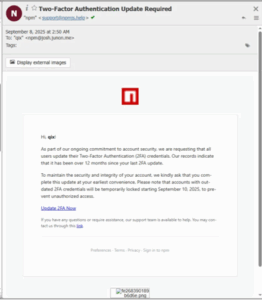

This week, what may be the largest supply chain attack in history caused massive fallout. On September 8th, Josh Junon, a JavaScript developer, received an email that appeared to come from npm (Node Package Manager). It told users to update their two-factor authentication (2FA) details and provided a link for doing so. The email also warned that if this was not done by September 10th, the account would be locked as a security measure. Josh saw the message on his phone, became worried, and clicked the link to avoid problems later, which took him to the attacker's page.

Figure 1: The phishing email received by the developer

Two things stand out here: the URL closely imitates the real npm domains (npmjs.com or npmjs.org), and it contained the victim’s username (“qix”), which shows the attack was targeted. The bigger problem is that Josh maintains dozens of JavaScript packages used by applications worldwide (collectively more than 2 billion downloads per week), so compromising his account had an enormous potential impact.
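One habit this suggests is checking a link's exact hostname rather than how it looks at a glance. The TypeScript sketch below is a minimal illustration, not an npm tool: `TRUSTED_NPM_HOSTS` and `isTrustedNpmLink` are hypothetical names, and the allowlist assumes the genuine site is served from `npmjs.com` or `www.npmjs.com` over HTTPS. It relies only on the standard WHATWG `URL` parser available in browsers and Node.js.

```typescript
// Hypothetical allowlist: the only hosts this sketch treats as the genuine npm website.
const TRUSTED_NPM_HOSTS: readonly string[] = ["npmjs.com", "www.npmjs.com"];

// Returns true only when the link uses HTTPS and its hostname exactly matches
// a trusted host. Lookalike domains and "npmjs.com.something" variants fail.
function isTrustedNpmLink(link: string): boolean {
  let url: URL;
  try {
    url = new URL(link);
  } catch {
    return false; // not a parseable absolute URL
  }
  return (
    url.protocol === "https:" &&
    TRUSTED_NPM_HOSTS.includes(url.hostname.toLowerCase())
  );
}
```

For example, `https://www.npmjs.com/login` passes, while `https://npmjs.help/settings` and `https://www.npmjs.com@evil.example/` both fail; in the last case `www.npmjs.com` is only the userinfo part and the real host is `evil.example`. Exact matching is deliberately strict, because a check that merely looks for "npmjs" somewhere in the hostname would accept the very lookalikes described above.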

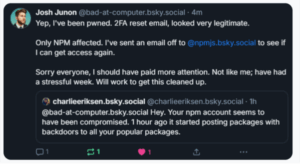

As a result, new versions of many of those packages were published containing malicious code. This is a supply chain attack: instead of attacking the final product directly, the attacker targets the libraries or components that the product depends on, and this may be the largest supply chain incident in history. Any application that pulled the malicious new versions was also compromised, including development builds running on developers’ machines. Now imagine malicious code written to propagate itself across packages, hijack developer accounts to reach more victims, and install persistence or destructive payloads such as ransomware. That would be catastrophic for large parts of the web.
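Why do applications pull new versions automatically? npm projects commonly declare dependencies with caret ranges such as `^5.3.0`, which accept any later release with the same major version. The sketch below is a deliberately simplified model of that rule, with hypothetical helper names and made-up version numbers; it ignores 0.x versions, prerelease tags, and other range syntaxes that the real `semver` package handles.

```typescript
type Version = [number, number, number];

// Parses a plain "MAJOR.MINOR.PATCH" string (no prerelease or build tags).
function parseVersion(v: string): Version {
  const m = /^(\d+)\.(\d+)\.(\d+)$/.exec(v);
  if (!m) throw new Error(`unsupported version: ${v}`);
  return [Number(m[1]), Number(m[2]), Number(m[3])];
}

// Compares two versions field by field: negative, zero, or positive.
function compareVersions(a: Version, b: Version): number {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] - b[i];
  }
  return 0;
}

// Simplified caret rule: "^X.Y.Z" with X >= 1 accepts >= X.Y.Z and < (X+1).0.0.
// Real npm semver also special-cases 0.x versions and prereleases; this sketch does not.
function satisfiesCaret(version: string, range: string): boolean {
  if (!range.startsWith("^")) throw new Error(`not a caret range: ${range}`);
  const base = parseVersion(range.slice(1));
  if (base[0] === 0) throw new Error("0.x caret ranges are out of scope for this sketch");
  const v = parseVersion(version);
  return v[0] === base[0] && compareVersions(v, base) >= 0;
}
```

With a hypothetical range `^5.3.0`, `satisfiesCaret("5.6.1", "^5.3.0")` returns `true`, so any install that re-resolves the range would accept a newly published 5.6.1, whereas `6.0.0` and `5.2.9` are rejected. This is one reason committed lockfiles matter: `npm ci` installs the exact versions recorded in `package-lock.json` instead of re-resolving ranges.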

Figure 2: Sample of the packages maintained by Josh Junon

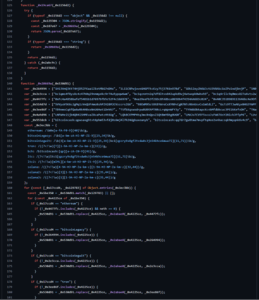

However, instead of a destructive payload, the attacker embedded a crypto stealer whose sole purpose was to hijack cryptocurrency transactions. The malicious code was designed to run in browser-like environments and hooked important functions such as ‘request’, ‘fetch’, and ‘XMLHttpRequest.prototype.send’. These functions were modified so that requests involving cryptocurrency addresses had their recipient swapped for an attacker-controlled address. The attacker used nearly 300 hardcoded replacement addresses and an algorithm that picked the one that looked most similar to the original, which makes casual manual checks unreliable.
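The article does not name the similarity measure. A common choice for "looks most similar" is Levenshtein (edit) distance, which is assumed here. The sketch below, with hypothetical function names and short made-up strings standing in for real addresses, shows how picking the nearest candidate from a pool produces a substitute that differs from the original in only a few characters.

```typescript
// Levenshtein distance via dynamic programming, keeping only two rows in memory.
function levenshtein(a: string, b: string): number {
  let prev: number[] = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    const curr: number[] = [i];
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      curr[j] = Math.min(
        prev[j] + 1, // deletion
        curr[j - 1] + 1, // insertion
        prev[j - 1] + cost, // substitution (or match)
      );
    }
    prev = curr;
  }
  return prev[b.length];
}

// Returns the candidate with the smallest edit distance to `target`;
// on ties, the earliest candidate in the list wins.
function closestMatch(target: string, candidates: readonly string[]): string {
  if (candidates.length === 0) throw new Error("no candidates");
  let best = candidates[0];
  let bestDist = levenshtein(target, best);
  for (const c of candidates.slice(1)) {
    const d = levenshtein(target, c);
    if (d < bestDist) {
      best = c;
      bestDist = d;
    }
  }
  return best;
}
```

Given the made-up pool `["0xAAAA1111", "0x12345679", "0xFFFF0000"]`, `closestMatch("0x12345678", pool)` returns `"0x12345679"`, a single edit away from the target. The defensive takeaway is that glancing at the first and last few characters of an address is not enough; the full string should be compared against a trusted source before a transaction is signed.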

Because the payload targets browser globals such as ‘window.ethereum’, it activates mainly when a user loads a web page or web-based app that includes the compromised code and has a web3 wallet connected. However, because the malicious code ran at module evaluation time and referenced globals such as ‘fetch’ without proper guards, it also caused failures in CI/CD and build systems that imported those packages, even though those environments were not what the attacker was trying to steal from. The incident therefore disrupted many development pipelines and forced a rapid global cleanup.
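The CI/CD failures follow from a basic JavaScript rule: reading a property of a global that does not exist throws immediately, and code that runs at module evaluation time throws while the package is still being imported. The TypeScript sketch below contrasts an unguarded and a guarded read. The helper names are hypothetical, and globals are looked up by name through `globalThis` so the example compiles without browser type definitions. A bare identifier such as `window` would throw a `ReferenceError` rather than the `TypeError` shown here, but the effect on an importing build script is the same.

```typescript
// Looks up a global by name via globalThis; yields undefined if it does not exist.
function lookupGlobal(name: string): unknown {
  return (globalThis as unknown as Record<string, unknown>)[name];
}

// Unguarded: assumes the global exists, like the unguarded access the article describes.
// If the global is missing, reading a property from undefined throws a TypeError.
function unguardedRead(globalName: string, prop: string): unknown {
  const obj = lookupGlobal(globalName) as Record<string, unknown>;
  return obj[prop];
}

// Guarded: confirms the global is an object before reading from it,
// so the same code is harmless in Node.js build scripts and CI runners.
function guardedRead(globalName: string, prop: string): unknown {
  const obj = lookupGlobal(globalName);
  if (typeof obj !== "object" || obj === null) return undefined;
  return (obj as Record<string, unknown>)[prop];
}

// Runs a function and reports whether it finished without throwing,
// standing in for "importing this module did not crash the build".
function runsWithoutThrowing(fn: () => unknown): boolean {
  try {
    fn();
    return true;
  } catch {
    return false;
  }
}
```

In Node.js, where there is no `window` global, `guardedRead("window", "ethereum")` returns `undefined`, whereas `unguardedRead("window", "ethereum")` throws. Legitimate libraries that touch browser-only APIs use the same kind of guard so they can be imported safely in server and build environments.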

Figure 3: Part of the code containing the attacker’s cryptocurrency addresses

Put simply, the attacker phished a maintainer, used the stolen credentials to publish poisoned updates, and those updates were pulled into client bundles and developer environments. The financial theft was small (a few dollars’ worth of ETH and tokens), but the operational cost and security fallout were massive: teams worldwide had to triage, rebuild, and rotate secrets. Treat this as a reminder that the weakest link is often the human, and that maintainers are high-value targets.
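For the triage step, a reasonable first pass is checking whether any known-bad version actually ended up in a project's dependency tree. The sketch below walks the `packages` map of an npm lockfile (the layout used by lockfile versions 2 and 3; older version 1 lockfiles use a nested `dependencies` tree and are not handled) and reports exact matches against a denylist. The function name and denylist shape are assumptions, the entries in the usage note are illustrative only, and the authoritative list of affected versions should come from the published security advisory.

```typescript
// The parts of an npm v2/v3 package-lock.json that this sketch reads.
interface LockfileEntry {
  version?: string;
}

interface Lockfile {
  packages?: Record<string, LockfileEntry>;
}

const NODE_MODULES = "node_modules/";

// Returns a sorted list of "name@version" for every installed package whose
// exact version appears in the denylist (package name -> set of bad versions).
function findCompromised(
  lock: Lockfile,
  denylist: ReadonlyMap<string, ReadonlySet<string>>,
): string[] {
  const hits: string[] = [];
  const packages: Record<string, LockfileEntry> = lock.packages ?? {};
  for (const [path, entry] of Object.entries(packages)) {
    const version = entry.version;
    // "" is the root project; entries without a version (e.g. links) are skipped.
    if (path === "" || version === undefined) continue;
    // Keys look like "node_modules/a/node_modules/@scope/b": the package name
    // is everything after the last "node_modules/" segment.
    const idx = path.lastIndexOf(NODE_MODULES);
    if (idx === -1) continue; // workspace folders are out of scope for this sketch
    const name = path.slice(idx + NODE_MODULES.length);
    if (denylist.get(name)?.has(version)) {
      hits.push(`${name}@${version}`);
    }
  }
  return hits.sort();
}
```

An illustrative denylist such as `new Map([["debug", new Set(["4.4.2"])]])` flags `debug@4.4.2` wherever it appears, including nested copies like `node_modules/a/node_modules/debug`. In practice the lockfile would be loaded with `JSON.parse` on the contents of `package-lock.json`. A clean result only covers what the lockfile records; build caches and already-deployed bundles still need to be checked and rebuilt.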

Conclusion

This case shows that a single successful phishing message to a maintainer can affect millions of users and dozens of organisations downstream. Phishing simulations (realistic templates, targeted scenarios, and follow-up training) are a core defensive control.

For more information on how we can support your business, get in touch today – info@istormsolutions.co.uk or call 01789 608708. Our team can help you build resilience against phishing and other social engineering threats through tailored awareness programmes, ongoing phishing simulations, and incident response readiness planning.

Author

Nicholas Ferreira, Penetration Testing Consultant, CREST CRT & CPSA, OSCP, CEH